In this post, I outline when and how to use single imputation using an expectation-maximization algorithm in SPSS to deal with missing data. I start with a step-by-step tutorial on how to do this in SPSS, and finish with a discussion of some of the finer points of doing this analysis.

1. Open the data-file you want to work with.

2. Sort the data file by ascending ID or Participant number. This is critical; if you do not do this, everything you do subsequently could be inaccurate. To do this, right-click on the ID column, and click “sort ascending.”
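If you prefer syntax to the right-click menu, the same sort can be sketched with the SORT CASES command. This assumes your participant identifier is a variable named ID, which is only a placeholder; substitute whatever your own file uses.

```spss
* Sort the active dataset by participant ID in ascending order.
* ID is an assumed variable name, so replace it with your own.
SORT CASES BY ID (A).
```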

3. Open the Syntax Editor in SPSS (File –> New –> Syntax).

4. Copy and paste the following syntax into the Syntax Editor, adding in your own variables after MVA VARIABLES, and specifying a location on your computer after OUTFILE. Also, note that .sav is the file extension for an SPSS data file, so make sure the file name ends in that.

MVA VARIABLES=var1 var2 var3 var4 var5
  /MPATTERN
  /EM(TOLERANCE=0.001 CONVERGENCE=0.0001 ITERATIONS=100 OUTFILE='C:\Users\Owner\Desktop\file1.sav').

5. Highlight all the text in the syntax file, and click on the “run” button on the toolbar (or choose Run –> All from the menu).

6. This will produce a rather large output file, but only a few things within are necessary for our purposes: (a) Little’s MCAR Test and (b) whether or not the analysis converged. Both appear as footnotes beneath the EM estimated statistics tables (e.g., the EM Means table) in the output.

(a) If Little’s MCAR test is nonsignificant, this is a good thing! It means there is no evidence against your data being Missing Completely at Random (see #4 in FAQ).

(b) This second message is an error. It will only pop up if there is a problem. If you don’t find it at all in the output, it’s because everything is working properly. If this message DOES pop up, it means that the data imputation will be inaccurate. To fix it, increase the number of iterations specified in the syntax (e.g. try doubling it to 200 first). If that doesn’t work, try reducing the number of variables in your analysis.
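As a rough sketch of that fix, here is the same command from step 4 with the iteration limit doubled. The variable names and the OUTFILE path are still the placeholders used above, not values from your data.

```spss
* Same command as in step 4, with ITERATIONS raised from 100 to 200.
* var1 to var5 and the OUTFILE path are placeholders.
MVA VARIABLES=var1 var2 var3 var4 var5
  /MPATTERN
  /EM(TOLERANCE=0.001 CONVERGENCE=0.0001 ITERATIONS=200 OUTFILE='C:\Users\Owner\Desktop\file1.sav').
```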

9. The syntax you ran also saved a brand new datafile in the location you specified above. Open that datafile.

10. If everything went well, this new data file will have no missing data! (You can verify this for yourself by running Analyze –> Descriptive Statistics –> Frequencies on all your variables). However, the new datafile will ONLY contain the variables listed in the syntax above. If you want to have these variables in your master data file, you will have to merge the files together.
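The Frequencies check can also be run from syntax. This is a minimal sketch using the placeholder variable names from step 4; run it with the newly created file as the active dataset.

```spss
* FORMAT=NOTABLE suppresses the long frequency tables, leaving the
  Statistics table, which reports N Valid and N Missing per variable.
FREQUENCIES VARIABLES=var1 var2 var3 var4 var5
  /FORMAT=NOTABLE.
```

If the imputation worked, every variable should show zero cases under Missing.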

Merging the master file and the file created with EM above

11. In the data file created with the above syntax, rename every variable. Make it simple, something like the following syntax:

RENAME VARIABLES (var1 = var1_em).

You are doing this because you do not want to overwrite the raw data with missing values included in the master data file.
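To rename several variables at once, one option is to list all the old names, then all the new names, inside a single pair of parentheses. The names below are the placeholders from step 4, and the _em suffix is only a suggestion.

```spss
* Run in the EM-imputed file. Old names go left of the equals sign,
  new names go right, in the same order.
RENAME VARIABLES (var1 var2 var3 var4 var5 = var1_em var2_em var3_em var4_em var5_em).
```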

12. Next, add an ID number variable (representing the participant ID number) that will be identical to whatever is in your master file (including variable name!). You’ll need this later to merge the files. If you sorted correctly, you should be able to copy and paste it from the master file.

13. Make sure both the master data file and the new data file created with the above syntax are open at the same time. Make sure both files are sorted by ascending ID number, as described in step 2. I can’t stress this enough. Double check to make sure you have done this.

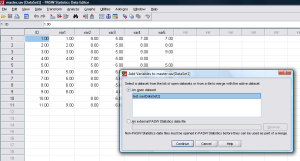

14. In the master file (not the smaller, newly-created file), click on Data –> Merge Files –> Add Variables.

15. Your new data set should be listed under “open datasets.” Click on it and press “continue.”

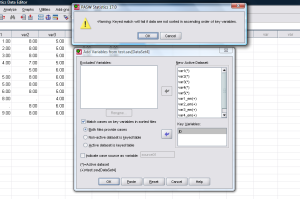

16. In the next screen, click “match cases on key variables in sorted files,” and “Both files provide cases.” Place “ID” (or whatever your participant ID number variable is) in the box “key variables.” Then click okay. You will get a warning message; if you sorted the data files by ID number as instructed, you may click “ok” again to bypass the warning message.

17. The process is complete! You now have a master dataset with a set of variables with the missing data replaced as well as the raw data with the missing data still included. This is valuable to make sure that you aren’t getting drastically different results between the imputed data and listwise deletion. When conducting your analyses, just make sure to use the variables that have no missing data!
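For those who would rather merge with syntax than with the menus, steps 13 to 16 can be sketched with MATCH FILES. This assumes that the imputed file has already been saved with its renamed variables and an ID variable, that it is already sorted by ID, and that ID and the file path are the placeholders used earlier.

```spss
* Run with the master file as the active dataset.
* FILE=* refers to the active dataset, and the second FILE is the
  saved EM file. Both files must be sorted by ID.
SORT CASES BY ID (A).
MATCH FILES
  /FILE=*
  /FILE='C:\Users\Owner\Desktop\file1.sav'
  /BY ID.
EXECUTE.
```

Because both inputs are listed with FILE rather than TABLE, both files provide cases, which matches the option chosen in step 16.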

FAQ

1. How does the EM Algorithm impute missing data?

Most of the texts on this topic are very complex and difficult to follow. After much searching on the web, I found a useful website which explains the conceptual ideas of EM in a very easy-to-understand format (http://www.psych-it.com.au/Psychlopedia/article.asp?id=267). So check this website out if you want to know what’s going on “under the hood.”

2. When should I use EM?

Generally speaking, multiple imputation (MI) and full information maximum likelihood (FIML) methods are both less biased, and in the case of FIML, quicker to implement. Use those methods wherever possible. However, sometimes the EM approach is useful when you want to create a single dataset for exploratory analysis, and the amount of missing data is trivial. It’s also sometimes useful to overcome software limitations at the analysis stage. For example, bootstrapping cannot be performed in AMOS software with missing data using the default FIML approach. Moreover, there is often no agreed-upon way to combine results across multiply imputed datasets for many statistical tests. In both of these cases, a single imputation using EM may be helpful.

As a rule of thumb, only use EM when missing data are less than 5%. If you have more missing data than this, your results will be biased. Specifically, the standard errors will be too low, making your p-values too low (increasing Type I error).
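One way to check how much data is missing before imputing is to run MVA with no subcommands. By default this prints a Univariate Statistics table that includes the count and percent missing for each variable. Note that these are per-variable percentages rather than a single overall figure, and the variable names are the placeholders from the tutorial.

```spss
* With no subcommands, MVA prints univariate statistics only,
  including the count and percent of missing values per variable.
MVA VARIABLES=var1 var2 var3 var4 var5.
```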

3. Which variables should I include in my list when imputing data?

This is a tricky question. If you read the tutorial on EM in #1 above, you will have an understanding that the EM algorithm imputes missing data by making a best estimate based on the available data. Long story short, if none of your variables are intercorrelated, you can’t make a good prediction using this method. Here are a few tips to improve the quality of the imputation:

a) Though it’s tempting to just throw in all of your variables, this isn’t usually the best approach. As a rule of thumb, do this only when you have 100 or fewer variables and a large sample size (Graham, 2009).

b) If you’re doing questionnaire research, it’s useful to impute data scale by scale. For instance, with an 8-item extraversion scale, run an analysis with just those 8 items. Then run a separate analysis for each questionnaire in a similar fashion. Merging the data files together will be more time-consuming, but it may provide more accurate imputations.

c) If you want to improve the imputation even further, add additional variables that you know are highly correlated (r > .50) with your questionnaire items of interest. For example, if you have longitudinal data where the same variable is measured multiple times, consider including the items from each wave of data when you’re imputing data. For instance, include the 10 items from time 1 depression and the 10 items from time 2 depression for a total of 20 items.
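As an illustration of tip (b), a scale-by-scale run for the 8-item extraversion example might look like the sketch below. The item names extra1 to extra8 and the output file name are hypothetical; you would repeat the command with a different variable list and OUTFILE for each questionnaire.

```spss
* Hypothetical item names for an 8-item extraversion scale.
* Each scale gets its own run and its own output file.
MVA VARIABLES=extra1 extra2 extra3 extra4 extra5 extra6 extra7 extra8
  /EM(TOLERANCE=0.001 CONVERGENCE=0.0001 ITERATIONS=100 OUTFILE='C:\Users\Owner\Desktop\extraversion_em.sav').
```

For tip (c), the same pattern applies with the extra correlated items (e.g., the time 1 and time 2 depression items) added to the variable list.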

4. What does Little’s MCAR test tell us?

Missing data can be Missing Completely at Random (i.e., no discernible pattern to missingness), Missing at Random (i.e., missingness depends upon another observed variable), or Missing Not At Random (i.e., missingness is due to some unmeasured variable). Ideally, missing data should be Missing Completely at Random, as you’ll get the least amount of bias. A good tutorial on this distinction can be found in Graham (2009).

Little’s MCAR test is an omnibus test of the patterns in your missing data. If this test is non-significant, there is no evidence against your data being Missing Completely At Random. Be aware, though, that it doesn’t rule out the possibility that data are Missing Not At Random; after all, if the variable wasn’t in the model, you’ll never know if it was important.

5. How might I report this missing data strategy in a paper?

I suggest something like the following:

“Overall, only 0.001% of items were missing from the dataset. A non-significant Little’s MCAR test, χ²(1292) = 1356.62, p = .10, was consistent with the data being missing completely at random (Little, 1988). When data are missing completely at random and only a very small portion of data are missing (e.g. less than 5% overall), a single imputation using the expectation maximization algorithm provides unbiased parameter estimates and improves statistical power of analyses (Enders, 2001; Scheffer, 2002). Missing data were imputed using Missing Values Analysis within SPSS 20.0.”

Supplementary Resources

Enders, C. K. (2001). A primer on maximum likelihood algorithms available for use with missing data. Structural Equation Modeling, 8, 128-141. doi: 10.1207/S15328007SEM0801_7

Graham, J. W. (2009). Missing data analysis: Making it work in the real world. Annual Review of Psychology, 60, 549-576. doi: 10.1146/annurev.psych.58.110405.085530

Scheffer, J. (2002). Dealing with missing data. Research Letters in the Information and Mathematical Sciences, 3, 153-160. Retrieved from http://equinetrust.org.nz/massey/fms/Colleges/College%20of%20Sciences/IIMS/RLIMS/Volume03/Dealing_with_Missing_Data.pdf

36 replies on “Single Imputation using the Expectation-Maximization Algorithm”

Thank you so much. It was a great help

Hello,

Is it correct to include your dependent variable as well?

Thank you!

Greetings Karina

Hi Karina. Like many issues in statistics, it’s a contentious issue. Some say you should not, and others say it’s okay. As some general advice from my perspective, if you’re already meeting the requirements for EM (i.e., < 5% missing data that is MAR or MCAR) then it probably won't matter much if you choose to impute your DVs or to delete the cases that are missing them. Really, in this non-severe missing data case it doesn't matter all that much what method of handling missing data you choose. So if you meet those assumptions, you could probably impute values on the DV with this method to maximize power without much consequence. As missing data increases though, you need more sophisticated methods such as multiple imputation or a full information maximum likelihood method, and the issue of imputing on DVs becomes more contentious. For a brief intro to the topic of imputing DVs, see this article: https://www.amstat.org/sections/srms/proceedings/y2010/Files/400142.pdf

Sean, thanks for the helpful article. SPSS will only impute quantitative variables using the EM method. Any recommendations on how to get around this? I have missing data for a few categorical/nominal variables.

I’ve heard of people running the algorithm and just rounding to whole numbers (e.g., 1.3546 becomes 1), but I can’t speak to how biased this is as I’ve not read a scholarly paper on this. I would try the “multiple imputation” procedure in SPSS. If memory serves, you can specify missing values as categorical with that method. Truth be told, multiple imputation is a better procedure all-around … it’s just a pain in the analysis stage, because you have to aggregate over multiple datasets.

If you do use multiple imputation, what is the best way to aggregate the scores? (i.e. if in SPSS you need to use a function that does not automatically analyse the pooled imputations like PROCESS?)

Hi Amy. No easy answer, because it can vary depending on the analysis you’re doing. If you’re using multiple imputation in SPSS, it will automatically pool data for some statistics. If that’s the case for what you’re doing, go with that. If not … you may need to learn some R. Check out the MICE package, for more complex problems:

http://www.stefvanbuuren.nl/publications/mice%20in%20r%20-%20draft.pdf

Thanks for the good directions above. If I have categorical data that have been dummy coded into 0 and 1 binary responses, and have missing responses, or if I have ordinal data with missing data, can I use Little’s test to determine if I have MCAR or NMAR data? Or is this just something that can be done with quantitative data? If I just have all categorical data, I cannot get the EM estimate box to open. I have played with running the categorical and ordinal data in the quantitative box in SPSS 22, and it runs, but I’m not sure that the output is reliable. Thanks in advance.

Hi Barbara. I’m pretty sure that SPSS can accommodate categorical variables as predictors of missing data in the Missing Values Analysis procedure, and Little’s MCAR test will be fine for that. However, imputing categorical variables is a whole other can of worms. So far as I know, the Missing Values Analysis procedure in SPSS can’t impute categorical variables. Thus, if you put in ONLY categorical variables, no imputation would be taking place (which is why the EM window won’t pop up). So, categorical variables can be used as predictors in SPSS, but it doesn’t replace missing data for them. Missing data imputation methods for categorical data lag behind methods for continuous data, it seems. At the moment, it does look like multiple imputation or stochastic regression are decent solutions for categorical data (albeit harder to implement), see here:

http://www.jdsruc.org/upload/JDS-612%282010-7-1142936%29.pdf

Hi Sean, thanks so much of your post. I found it very useful. I was wondering what you recommend if there is more than 5% missing data for a particular variable? I have 8-10% missing data for a number of my independent variables. Thanks again.

It might depend on the analysis you’re doing, but in SPSS the best approach is something called “Multiple Imputation.” That can be used for more substantial missing data problems. Alternatively, you can analyze the data using structural equation modelling software (e.g., AMOS, Mplus, LISREL), which uses a method called Full Information Maximum Likelihood, another gold-standard approach. If you want a review, I’d suggest you read the article here:

http://www.stats.ox.ac.uk/~snijders/Graham2009.pdf

Hi Sean,

Thank you for this helpful post. May I know whether we can use this EM imputation technique for a repeated measures study? For example, we have 4 time points: baseline (N=100), post-intervention (N=90), 6 months post-intervention (N=80) and 12 months post-intervention (N=70). This means that 30 subjects missed at least 1 timepoint measuring the outcome variables.

Thanks!

Hi Wan. No, I would not use this technique in the example you give. This single imputation approach is probably only useful for situations where you have less than 5% missing data. In your case, the best thing to do will be use a maximum likelihood approach. So, analyze your data using a multilevel model or structural equation modeling. These approaches handle missing data in a more sophisticated way without needing a separate imputation process. I have some slides on growth curve analysis that might be helpful:

http://www.slideshare.net/smackinnon/growth-curves-in-spss-a-gentle-introduction

Hi Sean,

I don’t know if you are still checking this post but I will ask anyway.

I ran an EM to impute missing data for a Likert-scale question. The imputed data that I obtained using SPSS software were not whole numbers. I have rounded off these values by excluding any decimal places in the data table, but one of the values rounds off to 0. A value of 0 is impossible as the Likert scale only goes from 1-5. Is there any way to restrict imputed values to between 1 and 5?

Thanks in advance.

Hi Natalia. If you want to do that, then you can probably just do it in two steps. First, do your imputation. Then, use the RND function in SPSS syntax to round to whole numbers:

http://www.spss-tutorials.com/spss-main-numeric-functions/

That said, I wouldn’t do that because it will reduce the effectiveness of the EM imputation. It will work best if you just keep the decimal numbers, even if they are impossible values for a Likert scale.
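For anyone who decides to round anyway, the two steps might be sketched as follows. Here var1_em is the placeholder name for an imputed variable, var1_em_round is a hypothetical new variable, and the 1 to 5 limits are taken from the Likert scale in the question; the caveat above about reduced effectiveness still applies.

```spss
* RND rounds to the nearest whole number. The result goes into a new
  variable so the unrounded imputed values are kept.
COMPUTE var1_em_round = RND(var1_em).
* Pull any out-of-range values back to the scale limits of 1 and 5.
IF (var1_em_round < 1) var1_em_round = 1.
IF (var1_em_round > 5) var1_em_round = 5.
EXECUTE.
```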

Hi Sean!

You suggested not rounding decimal numbers after EM. However, what should I do when the obtained results are negative values or exceed the range of the Likert-scaled questions (e.g., -4 or 8 when the Likert scale runs from 1 to 7)?

Thanks !

Hi Mirjana. So far as I know, the best present practice is to leave in out-of-range values when imputing. So no rounding or correcting values to be within the original range of the scale. I’m basing this reasoning on this paper:

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4021274/

That said … if you were getting a wildly out-of-range value (like the -4 example) something may have gone wrong with the imputation process. For instance, if you didn’t meet the missing at random assumption, then the imputation process can’t come up with good values for imputation (i.e., it only works if some variables in the model can actually predict missingness). Also, multiple imputation is often a better option than EM imputation.

Thank you very much for the quick reply, the help and the article! I will probably follow your advice and eventually do multiple imputation, but I also want to be sure how to work with EM. That said, I just have one more question. Since EM does not impute data for categorical variables (in my case gender), what can I actually do with those missing data, or what do researchers usually do with them? Would it be ok to treat them as a third category (i.e., male – 0, female – 1, missing values – 2)?

Thank you!

Honestly, there isn’t much useful you can do other than leave them blank and use listwise deletion without moving to some other method. If the only categorical variable is gender, I would first try to figure out what those values should be through some other method (e.g., inspecting consent forms or other paper trails to identify the sex of the participants).

This was so helpful! Thank you

Hi Sean,

I used EM in SPSS for my dependent variable that had 7% missing. Now when I run logistic regression, SPSS tells me my dependent variable has more than two non-missing values … but it does not; it is binary … and needless to say, now it will not run. Do you know how to fix this?

My other question is that I was given a variable that has been changed to t scores, and I need to see if this variable affects my DV in addition to other variables. Would I just add the t score variable to the model, OR is that a different test?

Hi Lyn. If you imputed a binary outcome variable with EM, likely some of the imputed values are decimal values (e.g., 1.01). So it is no longer binary. Inspect the variable with frequencies, and by manually looking at each value in the column. For your logistic regression to work, the outcome needs to be binary. If you’re getting that error message, the dependent variable must have more than just two possible values.

Not sure how to answer your second question; not sure what you are doing.

Hi Sean,

Just wondering what my options are if I have missing values on True/False measures? If I’m understanding the comments correctly, I can’t use EM to impute binary variables? I need to keep as much data as I can so listwise deletion is not an option for me…

Thanks!

Hi Michelle. Well, if you’re going to sum the true/false measures into a total score, you can always impute the total score instead of the raw items. Otherwise, imputing categorical variables is actually a bit harder. You’ll probably need to use the multiple imputation procedure in SPSS, which is generally a better approach anyway.

If there’s only a small amount of missing data (which, if you’re thinking of using this EM approach at all, there should be) the simple solution is to use listwise deletion. It loses a bit of power, but is relatively unbiased.

How could I implement the EM algorithm in Excel in order to fill in some values which are missing on a questionnaire?

Thank you

As far as I know, you can’t use Excel for this. It doesn’t have the option. You’ll have to use a dedicated statistics program, such as SPSS or R.

Indeed, I can’t find it anywhere, but Excel is the program we use at my job. We used the EM algorithm in SPSS during my studies, but how else can I replace missing values in Excel? Suppose we have a questionnaire with missing values on criteria that can’t be taken into account based on the survey. One way is to delete the missing values completely and not take them into account, so they are dropped automatically from the sample and we find the average with one less category. But is that reliable, given that the sample includes 66 or 72 observations and there could be 0 to 6 missing values in a category? The sample looks like this, with discrete grades of -200, -100, 0, 100, 200:

0 200 200 100

200 100 -200 100

-200 -100 200 -200

100 200 100 200

0 100 0 100

100 0 -200 200

200 100 200 100

200 100 -100 0

200 200 200 -200

200 200 200 200

? -200 ? ?

200 200 100 200

thank you

If you’re using Excel, the safest thing to do is listwise deletion. So drop all the cases with incomplete data. This does reduce statistical power, but is generally unbiased. Sorry, Excel doesn’t have very many useful methods for what you are doing.

Hi Sean. Thank you for your helpful post. I have data in which the DV has missing observations but the IVs have none. Should I input all variables into MVA in SPSS? Or should I just input the DV as a quantitative variable? If I just input the DV as a quantitative variable, the resulting EM dataset gives the same value for each missing observation. Thank you for sharing

Hi Listya. You should probably put in your IVs, along with any other supplementary variables that predict missing data (even if they are not used in your final models). If you just put in 1 variable, the algorithm won’t work well.

Hi Sean, thank you for your comment. Should missing data on the dependent variable be imputed?

Hi Listya. Some people disagree, but in general yes it’s fine to impute the DV. You shouldn’t be doing this method at all though, if you have more than ~5% missing data.

Thank you very much for this valuable insight!

Dear Sean, thank you for this nice tutorial! I don’t know if you still reply to this post, but I have a question. I have various items on a Likert scale from 0 to 4 and a few categorical variables, e.g. gender. After running the EM imputation, I have numbers with decimals like 2,45. Is it fine if I just round the values, so that I can continue my analysis? Or is there another possibility to deal with decimals?

At least for descriptive statistics I need whole numbers (just setting the format to no decimals doesn’t help here, I already tried that).

Thank you for your response!