In this post, I outline when and how to use single imputation using an expectation-maximization algorithm in SPSS to deal with missing data. I start with a step-by-step tutorial on how to do this in SPSS, and finish with a discussion of some of the finer points of doing this analysis.

1. Open the data-file you want to work with.

2. Sort the data file by ascending ID or Participant number. This is critical; if you do not do this, everything you do subsequently could be inaccurate. To do this, right click on the ID column, and click “sort ascending”

3. Open the Syntax Editor in SPSS:

4. Copy and paste the following syntax into the Syntax Editor, adding in your own variables after MVA VARIABLES, and specifying a location on your computer after OUTFILE. .Also, note that .sav is the file extension for an SPSS file, so make sure it ends in that.

MVA VARIABLES=var1 var2 var3 var4 var5

/MPATTERN

/EM(TOLERANCE=0.001 CONVERGENCE=0.0001 ITERATIONS=100 OUTFILE=’C:\Users\Owner\Desktop\file1.sav’).

5. Highlight all the text in the syntax file, and click on the “run” button on the toolbar:

6. This will produce a rather large output file, but only a few things within are necessary for our purposes: (a) Little’s MCAR Test and (b) whether or not the analysis converged. Both can be found in the spot indicated in the picture below:

(a) If Little’s MCAR test is nonsignificant, this is a good thing! It means that your variables are missing Completely at Random (see #4 in FAQ).

(b) This second message is an error. It will only pop up if there is a problem. If you don’t find it at all in the output, it’s because everything is working properly. If this message DOES pop up, it means that the data imputation will be inaccurate. To fix it, increase the number of iterations specified in the syntax (e.g. try doubling it to 200 first). If that doesn’t work, try reducing the number of variables in your analysis.

9. The syntax you ran also saved a brand new datafile in a location you specified above. Open that datafile.

10. If everything went well, this new data file will have no missing data! (You can verify this for yourself by running analyzeàFrequencies on all your variables). However, the new datafile will ONLY contain the variables listed in the syntax above. If you want to have these variables in your master data file, you will have to merge the files together.

Merging the master file and the file created with EM above

11. In the data file created with the above syntax, rename every variable. Make it simple, something like the following syntax:

RENAME VARIABLES (var1 = var1_em).

You are doing this because you do not want to overwrite the raw data with missing values included in the master data file.

12. Next, add an ID number variable (representing the participant ID number) that will be identical to whatever is in your master file (including variable name!). You’ll need this later to merge the files. If you sorted correctly, you should be able to copy and paste it from the master file.

13. Make sure both the master data file and the new data file created with the above syntax are open at the same time. Make sure both files are sorted by ascending ID number, as described in step 2. I can’t stress this enough. Double check to make sure you have done this.

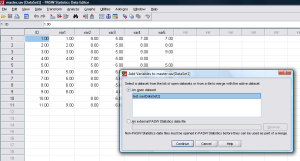

14. In the master file (not the smaller, newly-created file), Click on Data –> Merge Files –> Add Variables

15. Your new data set should be listed under “open datasets.” Click on it and press “continue”

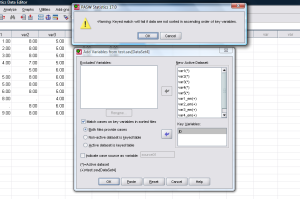

16. In the next screen, click “match cases on key variables in sorted files,” and “Both files provide cases.” Place “ID” (or whatever your participant ID number variable is) in the box “key variables.” Then click okay. You will get a warning message; if you sorted the data files by ID number as instructed, you may click “ok” again to bypass the warning message.

17. The process is complete! You now have a master dataset with a set of variables with the missing data replaced as well as the raw data with the missing data still included. This is valuable to make sure that you aren’t getting drastically different results between the imputed data and listwise deletion. When conducting your analyses, just make sure to use the variables that have no missing data!

FAQ

1. How does the EM Algorithm impute missing data?

Most of the texts on this topic are very complex and difficult to follow. After much searching on the web, I found a useful website which explains the conceptual ideas of EM in a very easy-to-understand format (http://www.psych-it.com.au/Psychlopedia/article.asp?id=267). So check this website out if you want to know what’s going on “under the hood.”

2. When should I use EM?

Generally speaking, multiple imputation (MI) and the full-maximum likelihood (FIML) methods are both less biased, and in the case of FIML, quicker to implement. Use those methods wherever possible. However, sometimes the EM approach is useful when you want to create a single dataset for exploratory analysis, and the amount of missing data is trivial. It’s also sometimes useful to overcome software limitations at the analysis stage. For example, bootstrapping cannot be performed in AMOS software with missing data using the default FIML approach. Moreover, there is often no agreed-upon way to combine results across multiply imputed datasets for many statistical tests. In both of these cases, a single imputation using EM may be helpful.

As a rule of thumb, only use EM when missing data are less than 5%. If you have more missing data than this, your results will be biased. Specifically, the standard errors will be too low, making your p-values too low (increasing Type I error).

3. Which variables should I include in my list when imputing data?

This is a tricky question. If you read tutorial on EM in #1 above, you will have an understanding that the EM algorithm imputes missing data by making a best estimate based on the available data. Long story short, if none of your variables are intercorrelated, you can’t make a good prediction using this method. Here are a few tips to improve the quality of the imputation:

a) Though it’s tempting to just throw in all of your variables, this isn’t usually the best approach. As a rule of thumb, do this only when you have 100 or fewer variables and a large sample size (Graham, 2009).

b) If you’re doing questionnaire research, it’s useful to impute data scale by scale. For instance, with an 8-item extraversion scale, run an analysis with just those 8 items. Then run a separate analysis for each questionnaire in a similar fashion. Merging the data files together will be more time-consuming, but it may provide more accurate imputations.

c) If you want to improve the imputation even further, add additional variables that you know are highly correlated (r > .50) with your questionnaire items of interest. For example, if you have longitudinal data where the same variable is measured multiple times, consider including the items from each wave of data when you’re imputing data. For instance, include the 10 items from time 1 depression and the 10 items from time 2 depression for a total of 20 items.

4. What does Little’s MCAR test tell us?

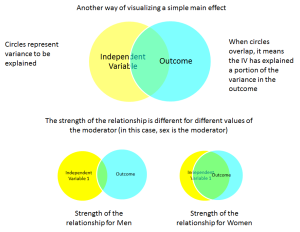

Missing data can be Missing Completely at Random (i.e., no discernible pattern to missingness), Missing at Random (i.e., missingness depends upon another observed variable), or Missing Not At Random (i.e., missingness is due to some unmeasured variable). Ideally, missing data should be Missing Completely at Random, as you’ll get the least amount of bias. A good tutorial on this distinction can be found in Graham (2009).

Littles MCAR test is an omnibus test of the patterns in your missing data. If this test is non-significant, there is evidence that your data are Missing Completely At Random. Be aware though, that it doesn’t necessarily rule out the possibility that data are Missing at Random – after all, if the variable wasn’t in the model, you’ll never know if it was important.

5. How might I report this missing data strategy in a paper?

I suggest something like the following:

“Overall, only 0.001% of items were missing from the dataset. A non-significant Little’s MCAR test, χ2(1292) = 1356.62, p = .10, revealed that the data were missing completely at random (Little, 1988). When data are missing completely at random and only a very small portion of data are missing (e.g. less than 5% overall), a single imputation using the expectation maximization algorithm provides unbiased parameter estimates and improves statistical power of analyses (Enders, 2001; Scheffer, 2002). Missing data were imputed using Missing Values Analysis within SPSS 20.0

Supplementary Resources

Enders, C. K. (2001). A primer on maximum likelihood algorithms available for use with missing data. Structural Equation Modeling, 8, 128-141. doi: 10.1207/S15328007SEM0801_7

Graham, J. W. (2009). Missing data analysis: Making it work in the real world. Annual Review of Psychology, 60, 549-576. doi: 10.1146/annurev.psych.58.110405.085530

Scheffer, J. (2002). Dealing with missing data. Research Letters in the Information and Mathematical Sciences, 3, 153-160. Retrieved from http://equinetrust.org.nz/massey/fms/Colleges/College%20of%20Sciences/IIMS/RLIMS/Volume03/Dealing_with_Missing_Data.pdf